Hello un-prompted viewers, it's been a little while.

I plan to upload a little more frequently, especially as thesis work ramps up, but for now I would like to share some projects and technologies that I've discovered and worked on in the realm of AI, generative form, and interactivity.

I mentioned in a previous post that an aspect of my thesis would explore the interaction of generative audio and generative visuals. The projects and technologies in this post reflect that sentiment.

First, on the technology side, I have been exploring how to integrate AI audio in a realistic way, using OSC data that TouchDesigner can read to drive audio-reactive visuals. At first I wanted to write some type of Python script that could continuously generate audio and feed it into TD, but that is somewhat beyond me technically and involves a lot of Python environment setup, which in the end I decided would take away from the final output. If I can achieve the result I want within an existing program, that works just as well.
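For a sense of what "OSC data" means in practice, here is a minimal sketch of sending a single audio level over OSC using only the Python standard library. The address `/audio/level`, the port `7000`, and the function names are all placeholders of my own, not anything TouchDesigner prescribes; the port simply has to match whatever the receiving OSC In CHOP is set to listen on.

```python
import socket
import struct


def osc_pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC 1.0 requires."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_float_message(address: str, value: float) -> bytes:
    """Encode one OSC message carrying a single big-endian 32-bit float."""
    return (
        osc_pad(address.encode("ascii"))  # address pattern, e.g. /audio/level
        + osc_pad(b",f")                  # type tag string: one float argument
        + struct.pack(">f", value)        # the argument itself
    )


def send_level(level: float, host: str = "127.0.0.1", port: int = 7000) -> None:
    """Send one level value over UDP (host, port and address are assumptions)."""
    packet = osc_float_message("/audio/level", level)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))
```

Calling `send_level(0.5)` in a loop, with the level computed from whatever audio source is in use, would give TD a steady stream of values to react to. Ableton and VCV Rack plugins that speak OSC do essentially this behind the scenes.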

So for program explorations, two options come to mind: Ableton and VCV Rack. Both can make traditional generative music, particularly ambient music, a genre Brian Eno pioneered in the 1970s before popularizing the term "generative music" in the 1990s. Both also have AI-integrated plugins for more extensive generative audio, which fit better with what "AI" has come to mean since 2023.

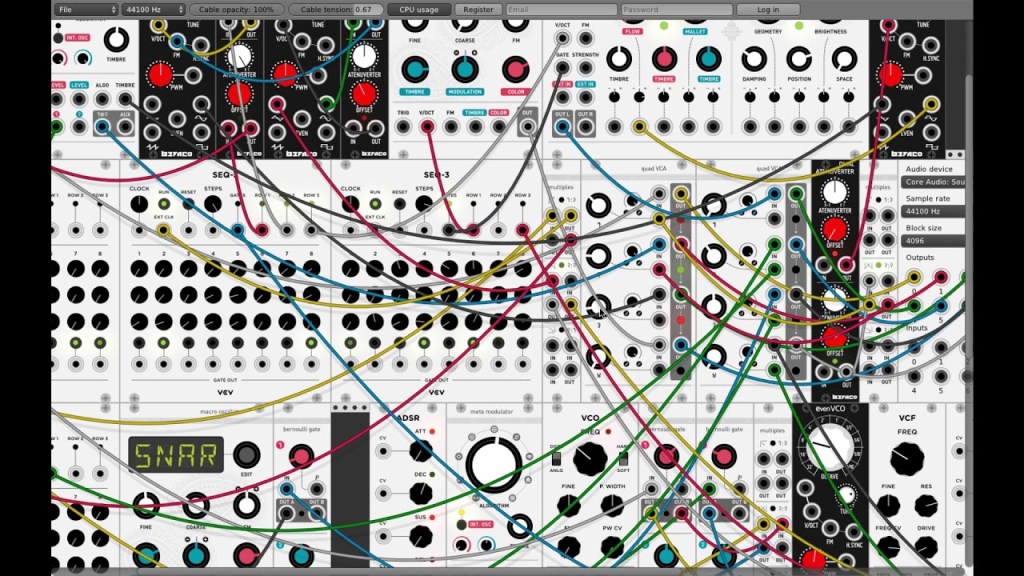

Of course, there are pros and cons to each platform. In terms of cost, Ableton is paid and VCV Rack is free (there is a paid version, but to my knowledge almost everything is achievable in the free one). The Ableton user interface is a bit more digestible and integrates with TouchDesigner through TDAbleton. The VCV Rack interface (above), by comparison, takes its inspiration from classic modular hardware synthesizers and can get pretty chaotic. VCV Rack also has no native integration with TouchDesigner, but again, OSC data and specific plugins make this easier.

I'll need to explore further, but at the end of the day I will choose whichever one more effectively integrates newer AI audio generation with traditional audio generation systems.

PROJECT CONCEPTS

Aside from these technological explorations, I have made some demo projects that integrate AI, audio, and some motion/facial tracking.

The first of these projects, shown below, was made in TouchDesigner with the StreamDiffusion and MediaPipe plugins. I refer to it as "Emotive-Reactive AI".

The project tracks the user's face, as shown by the 3D point map. Using the corners of the mouth for 'happiness' and the brow for 'sadness', the user controls the prompt weight for each emotion: calming, pleasant images appear when the user smiles, and bleak, solemn images when the user furrows their brow. The prompt for each emotion is also drawn at random from a prompt list whenever the user's emotion reaches a certain threshold, which accounts for the changes of image. Finally, the input media is taken from a solid facial model tracked to the user's head, with a noise filter overlaid in order to fill the entire block.
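The logic described above can be sketched in plain Python, outside of TouchDesigner. This is not the actual network from the project, just an illustration under a few assumptions: the raw mouth and brow measurements are taken to be single numbers, the resting and peak values are calibration constants someone would have to measure, the prompt lists are placeholders, and a new prompt is picked only at the moment the score crosses the threshold (one possible reading of "reaches a certain threshold").

```python
import random

# Placeholder prompt lists; the real lists from the project are not shown here.
HAPPY_PROMPTS = ["sunlit meadow, soft light", "calm lake at dawn"]
SAD_PROMPTS = ["bleak empty moor, overcast", "abandoned pier in fog"]


def normalize(value: float, rest: float, peak: float) -> float:
    """Map a raw face measurement onto 0..1 between a resting and a peak value."""
    score = (value - rest) / (peak - rest)
    return max(0.0, min(1.0, score))


class EmotionPrompter:
    """Holds the current prompt for one emotion and swaps it on a threshold crossing."""

    def __init__(self, prompts, threshold=0.6, rng=None):
        self.prompts = prompts
        self.threshold = threshold
        self.rng = rng or random.Random()
        self.current = self.rng.choice(prompts)
        self.was_above = False

    def update(self, score: float) -> str:
        above = score >= self.threshold
        # Pick a new prompt only when the score rises past the threshold,
        # not on every frame that it stays above it.
        if above and not self.was_above:
            self.current = self.rng.choice(self.prompts)
        self.was_above = above
        return self.current


def prompt_weights(smile: float, frown: float) -> dict:
    """Use the two emotion scores directly as the weights of the two prompts."""
    return {"happy": smile, "sad": frown}
```

Per frame, the two normalized scores would go into `prompt_weights`, and each `EmotionPrompter` would supply the prompt text for its side. In TD the same idea would more likely live in CHOPs and a small script, but the flow is the same.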

Expanding on this, I created a variation that incorporates pre-generated AI audio tracks for each emotion, which similarly increase in volume with the intensity of the user's emotion. In place of the landscape scenery from the first iteration, the AI takes primitive abstract shapes as input and meshes them together as they pass through the model.

Additionally, I explored the visual side further using audio from some musicians I know, and created some semi-real-time audio-reactive AI visuals, driven both by straight audio input and by a system that can be manipulated with a MIDI controller, in my case the Launch Control XL.
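As a rough illustration of the two kinds of control mentioned here, the sketch below turns a chunk of audio samples into a smoothed level and scales a MIDI knob value into a parameter range. None of this is taken from the actual project files; the function names, the attack and release coefficients, and the parameter ranges are assumptions chosen for the example.

```python
import math


def rms(samples) -> float:
    """Root-mean-square level of one chunk of audio samples (floats in -1..1)."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


class EnvelopeFollower:
    """Smooths a level so visuals rise quickly on a hit and fall back slowly."""

    def __init__(self, attack: float = 0.5, release: float = 0.05):
        self.attack = attack    # fraction of the gap closed per update when rising
        self.release = release  # fraction of the gap closed per update when falling
        self.value = 0.0

    def update(self, level: float) -> float:
        coeff = self.attack if level > self.value else self.release
        self.value += coeff * (level - self.value)
        return self.value


def cc_to_range(cc_value: int, low: float, high: float) -> float:
    """Scale a 7-bit MIDI CC value (0..127) into an arbitrary parameter range."""
    cc = max(0, min(127, cc_value))
    return low + (high - low) * cc / 127
```

The smoothed level could drive something like noise strength or prompt weight, while a knob on the controller sets how strongly the audio is allowed to push the image, for example via `cc_to_range(knob, 0.0, 2.0)`.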

These were great explorations for me to get started on actual deliverables for my thesis, and to see what's possible and what's not with the programs, plugins, and techniques chosen.