What I Made

This week, as most of us know, was for our big presentations, so that is where the bulk of my making went: slides and formatting. Making the slides gave me a chance to think about my work in a different context. My work hasn't existed entirely in an isolated circle, but formally presenting it to people with no context had me thinking more critically about which words go where and how to convey certain concepts so they are best understood. Before any feedback, while I was still developing the slides, I went back and forth between the words play, creativity, interaction, experimentation, and experience, and what they actually mean. Compared with where I was at the beginning of the semester, these words, their definitions, and what they mean in the context of my thesis make much more sense, but there is still more to be done in that area. Drawing from Tina and Silvia's feedback, I have done a lot of thinking about who my audience should be, but what I want them to feel is something I'll have to think about more, particularly where play fits into understanding and learning. I think the title "Playful Interaction" is a step in the right direction: it isn't entirely about play, and it places concepts like participation and experience alongside the concept of play, so play as a word may appear less and less in my thesis from this point forward.

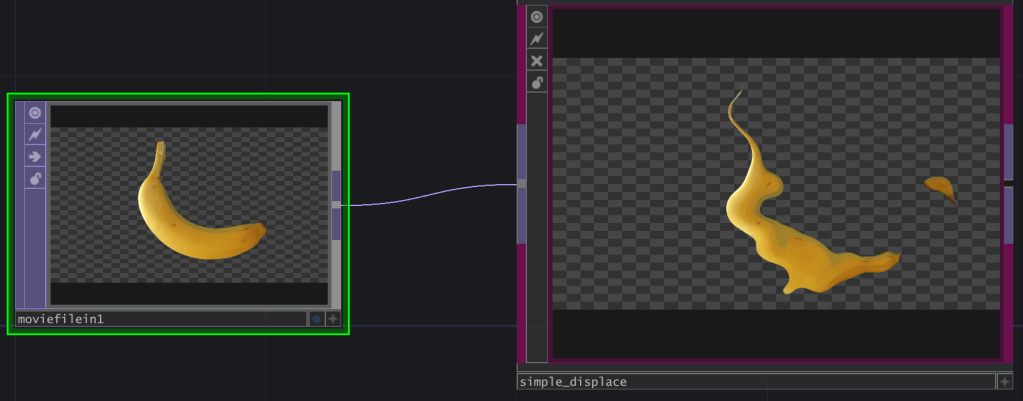

Before I step into Rao's feedback, I'll briefly cover some more applicable "things" I've made for the thesis: tools for the workshop. The idea behind them is to make TouchDesigner more accessible, so that less time goes into developing in and learning the program itself and more time goes into learning an AI workflow and fast-tracking results. I've been building some of these tools and gathering others from free open sources, with the idea that they can be used in the pre-processing and/or post-processing stages of my flow chart. So far these tools include, but are not limited to: a mouse drawing tool; easy machine vision for face and hand tracking; easy filters such as halftone, chromatic aberration, displacement, and noise; fundamental "mini-scripts" that are essential for movement in TouchDesigner (absTime.seconds and absTime.frame make an object move with the number of seconds or frames that have passed); drag-and-drop sine and cosine waves; and a poster tool that makes it easy to add text, shapes, and borders to a composition. There isn't much to show of these tools, since they all take the form of TouchDesigner node blocks, but I'll include screenshots.
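The movement mini-scripts and sine/cosine waves mentioned above boil down to feeding elapsed time into a function. As a minimal sketch outside TouchDesigner, assuming a 60 fps cook rate, the plain Python below mimics what absTime.seconds and absTime.frame provide; the helper names (`abs_seconds`, `orbit_position`, `drift`) are hypothetical and not part of TouchDesigner's API. Inside TouchDesigner itself, the same idea is usually typed straight into a parameter as an expression such as `absTime.seconds * 0.1`.

```python
import math

# Assumption: a 60 fps project cook rate. Inside TouchDesigner, absTime
# supplies these values directly; here we derive seconds from a frame count.
FPS = 60


def abs_seconds(frame: int, fps: int = FPS) -> float:
    """Seconds elapsed for a given absolute frame count (stand-in for absTime.seconds)."""
    return frame / fps


def orbit_position(seconds: float, radius: float = 1.0, speed: float = 1.0) -> tuple[float, float]:
    """Circular motion: cosine drives x and sine drives y, both fed by elapsed time."""
    angle = seconds * speed
    return (radius * math.cos(angle), radius * math.sin(angle))


def drift(frame: int, rate: float = 0.01) -> float:
    """Linear drift: position grows with the number of frames passed (stand-in for absTime.frame)."""
    return frame * rate


if __name__ == "__main__":
    # Print frame, elapsed seconds, orbit x/y, and linear drift for a few frames.
    for frame in (0, 60, 120):
        s = abs_seconds(frame)
        x, y = orbit_position(s)
        print(frame, round(s, 2), round(x, 3), round(y, 3), drift(frame))
```

Scaling `speed` changes how fast the object circles, and swapping cosine and sine reverses the direction of the orbit; the linear `drift` version is the frame-based equivalent of letting an object creep steadily across the screen.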

Mostly from Rao's feedback, along with some of the other comments, I think there are some good things to address here, mainly quantifying what AI literacy people take away from the installations. The interview and workshop protocols give me grounds to gauge what working practitioners have gained in literacy from working with AI, and what those with less experience stand to gain through the workshop, which I could perhaps have articulated better in my presentation. The installations are a possible area of concern, though, since there is currently no metric for what people gain in literacy from viewing and interacting with the pieces. This could become an added leg of research, doing something similar to what Mira Jung did with her soil research: having groups of participants view and interact with the pieces, then answer a questionnaire to see whether any knowledge was gained. I would have to decide on this pretty soon, though, since the installations would have to be finished by the end of the semester, a space to set them up would have to be chosen, and IRB approval would have to be obtained. I think it would be valuable for my thesis, but I'll need to think a bit more about the best next steps.

What I’ve Read

Continuing my reading of Transcending Imagination, Chapter 8 moves toward thought experiments and philosophical ideation, with topics like sentience and emergence. These are relevant to AI in general, but not particularly relevant to current issues with AI or to where my thesis is heading. He does make a distinction that I lean toward in my presentation: the separation of technical processes from analytical, critical processes. In the case of sentience, he talks about embodied functions, like mathematical and logic-driven operations, and emergent functions, such as judgment, pattern recognition, or aesthetic perception. He says that sentience is less about super-intelligence and more about the capacity of AI systems to produce outcomes that evoke understanding, reflection, or emotion in human observers. He then introduces the concept of autopoietic intelligence, which describes a self-maintaining system, and draws further parallels to biology and evolution/adaptation.

I don't think I'll cover AI sentience in my thesis, as I want to keep the focus on the people who use AI and how to increase their knowledge perceptually. Sentience is also still a very conceptual thing and fits into the hype/hate cycle as a kind of unknown: it's unknown whether it would even be possible, given the sheer resources required or otherwise, and I don't think it's particularly important in the here and now.

Where the Next Steps are Heading

After this week, the next steps are to keep working on the installations and reach out to interview candidates. I set up a Calendly to schedule times and make the process easier for them, so hopefully I'll have some interviews scheduled, or at least some conversations started, going into next week. For the installations, the first step is to get the network set up for non-sentences. Once I have that, I think I will pivot to the Emergent Garden and get its hand-tracking and gesture-as-interface aspects working in a live installation setting.
